Nvidia CEO Jensen Huang Discusses AI Chip Pricing and Emphasizes the Value of the Chinese Market

On March 20, IT Home reported that on the morning of March 19, local time, Nvidia CEO Jensen Huang (Huang Renxun) gave interviews to global media. He said the global data center market is where Nvidia's market opportunity lies. Asked about the pricing of Nvidia's AI chips, Huang said he was merely trying to give everyone a sense of pricing rather than quoting specific figures.

Here is a summary of the key points from the interview:

Regarding the pricing of Blackwell, Nvidia's latest-generation AI chip, which was speculated to cost between $30,000 and $40,000, Huang responded: "I am just trying to give everyone a sense of how our products are priced, not to give specific quotes. Prices vary significantly from system to system depending on each customer's needs. Nvidia does not sell chips; we sell data centers."

Huang pointed out that the global data center market reached around $250 billion last year and is growing at 20% to 25% a year, which presents an opportunity for Nvidia. With its wide range of chip and software offerings, Nvidia stands to capture substantial revenue from global investment in data center equipment.

He also emphasized the importance of the Chinese market. "We are doing our best to maximize Nvidia's business in China. We have introduced the L20 and H20 chips specifically for the Chinese market to meet its requirements," Huang said. He added that many components in Nvidia's chips are sourced from China, highlighting how difficult it would be to disrupt the globalized supply chain.

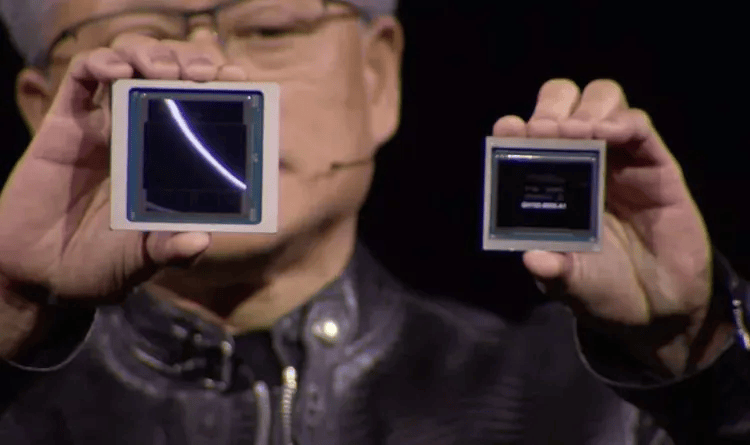

In a separate report, IT Home noted that Nvidia officially launched the Blackwell GB200 AI accelerator at its GTC 2024 developer conference and plans to ship it later this year. Huang said Blackwell's AI performance can reach 20 petaflops, compared with 4 petaflops for the H100. The additional processing power will enable AI companies to train larger and more complex models.