According to a report by The Information, citing insiders, American tech giant Microsoft and ChatGPT creator OpenAI are negotiating the details of a groundbreaking global data center project whose budget could reach $100 billion. The plan centers on an AI supercomputer provisionally named “Stargate,” which would be the largest AI supercomputing infrastructure the two companies plan to build over the next six years.

This colossal AI supercomputer, equipped with “millions” of AI chips, is intended to power more capable GPT models and new AI products beyond ChatGPT and the Sora video generator, expanding OpenAI’s computing capacity.

Insiders say the planned “Stargate” AI supercomputer would be able to host millions of the highest-performing AI chips, substantially strengthening OpenAI’s AI computing infrastructure. Since the two companies signed their first comprehensive cooperation agreement in 2019, Microsoft has pledged at least $13 billion to OpenAI.

Microsoft, currently OpenAI’s largest shareholder, has made an investment that ranks among the most significant in history. The company is likely to continue providing substantial financial support for the project, which would become part of the “five-phase plan” the two companies have already set in motion.

According to the insiders, Microsoft’s commitment to the project depends in part on OpenAI significantly improving its AI products, especially the capabilities of its next GPT models.

NVIDIA, the “king” of AI supercomputing and AI chip sales, is expected to play a critical role, although chips from other manufacturers and Microsoft’s own AI chips will also be important.

The ongoing collaboration between Microsoft and OpenAI follows a five-phase plan. The two companies are currently building a comparatively smaller AI supercomputer as part of the fourth phase; expected to cost about $10 billion, it is slated to be operational by 2026 in Pleasant Prairie, Wisconsin. For this project, Microsoft has prioritized large-scale deployment of NVIDIA’s AI chips.

For the “Stargate” AI supercomputer, Microsoft and OpenAI are expected to keep buying NVIDIA AI chips in large quantities, including the H100, the H200, and the latest Blackwell-architecture chips, while AI chips from other manufacturers and Microsoft’s in-house AI chips also play a vital role.

NVIDIA, the dominant name in AI data center chips, has seen demand surge for products such as the H100, used for both AI training and inference, producing exceptionally strong results for four consecutive quarters.

At its March GTC conference, NVIDIA launched the B200, a new AI chip based on the Blackwell architecture. The B200 delivers up to 20 PFLOPS of AI compute (at FP4 precision), compared with the 4 PFLOPS (at FP8) of the previous-generation H100, a headline fivefold increase that enables more complex large language models (LLMs).

NVIDIA’s latest GB200 supercomputing system represents a new class of computing platform that, according to NVIDIA, improves LLM inference workload performance by up to 30 times while cutting cost and energy consumption by up to 25 times compared with the previous Hopper architecture.

However, leaked details suggest that the chip configuration of the proposed “Stargate” AI supercomputer will be “more diversified,” combining NVIDIA’s offerings with high-performance AI chips from other manufacturers and Microsoft’s proprietary AI chips.

As global demand for AI chips skyrockets, NVIDIA stands as the undisputed “AI chip leader,” while Microsoft and OpenAI are working to reduce their reliance on its chips. OpenAI CEO Sam Altman has expressed a desire to build a global chip supply infrastructure to address this issue and secure a stable supply of AI chips for “Stargate.”

The design of the “Stargate” AI supercomputer will allow Microsoft and OpenAI to take advantage of AI chips developed by companies other than NVIDIA, including Microsoft’s recently announced Azure Maia AI accelerator, reducing their dependence on third-party chips.

Because the “Stargate” project is far more expensive than previous AI supercomputing efforts, adding it to the Microsoft-OpenAI supercomputing plan could push the plan’s total cost beyond $115 billion, more than three times what Microsoft spent last year on AI servers, new construction, and other infrastructure.

Media reports suggest that “Stargate” could be launched by 2028, with plans to expand the project’s scale and scope through 2030. Discussions have included alternative energy sources for the supercomputer, such as nuclear power, given the project’s potentially massive electricity demand of at least 5,000 megawatts (5 gigawatts), enough to power several of the world’s large data centers.
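To put the 5,000-megawatt figure in perspective, the short calculation below converts it into annual energy use. It assumes, purely for illustration, that the full load runs around the clock for a whole year, which real facilities do not; the variable names are hypothetical.

```python
# Rough scale check for the reported power requirement of "Stargate".
# Assumption (illustrative only): the full 5,000 MW load runs 24/7 for a year.
POWER_MW = 5_000            # reported minimum power requirement, in megawatts
HOURS_PER_YEAR = 24 * 365   # 8,760 hours in a non-leap year

energy_mwh = POWER_MW * HOURS_PER_YEAR   # megawatt-hours per year
energy_twh = energy_mwh / 1_000_000      # 1 TWh = 1,000,000 MWh

print(f"{POWER_MW / 1_000:.0f} GW -> about {energy_twh:.1f} TWh per year at full load")
```

Under that continuous-load assumption, 5 GW corresponds to roughly 43.8 TWh per year; actual consumption would depend on utilization.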

The surge in demand for AI chips is just beginning.

Current and future demand for AI chips is extremely strong, suggesting that AI chips may be entering a phase of explosive growth.

Intel CEO Pat Gelsinger and Sam Altman, known as the “father of ChatGPT,” recently discussed the world’s growing need for AI chips and for greater chip production capacity.

Riding what he calls the “Siliconomy” wave, Gelsinger outlined Intel’s plan to regain its leadership in chip manufacturing by capitalizing on surging demand for AI chips. He said Intel is willing to manufacture chips for any company, including longtime competitors NVIDIA and AMD, and predicted that by 2030 Intel’s chip foundry business could become the world’s second largest, behind industry leader TSMC.

Morris Chang, the “father of TSMC,” pointed to immense global demand for chip manufacturing capacity, including for AI chips, at the opening ceremony of TSMC’s first factory in Japan.

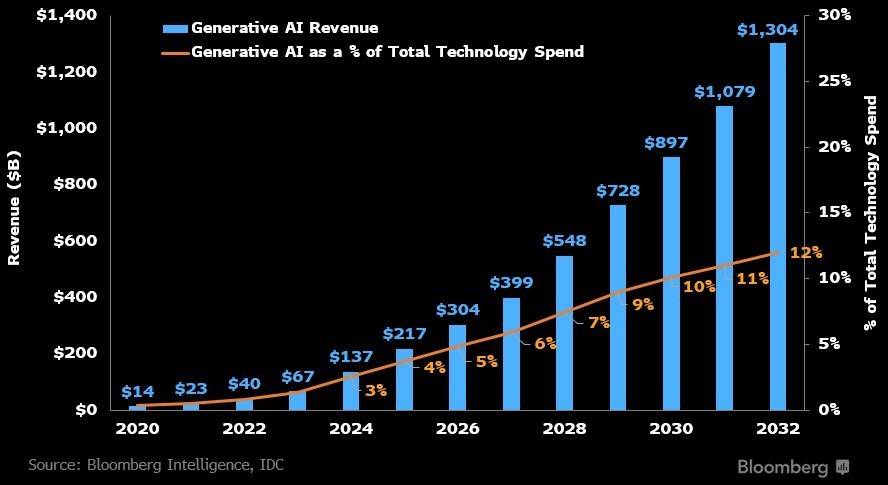

The proliferation of consumer-facing generative AI applications such as ChatGPT and Sora has sparked a global rush to invest in AI, potentially ushering in a decade-long AI boom. According to a report led by Mandeep Singh of Bloomberg Intelligence, the generative AI market is expected to grow from $40 billion in 2022 to $1.3 trillion by 2032, a roughly 32-fold increase over the decade and a compound annual growth rate (CAGR) of 42%.
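The growth figures in the Bloomberg forecast can be checked with the standard CAGR formula: the end value divided by the start value, raised to the power 1/years, minus 1. This sketch assumes a 2022 base year and a ten-year horizon to 2032, matching the “over the decade” framing; the variable names are illustrative.

```python
# Check the growth multiple and CAGR implied by the Bloomberg generative AI forecast.
# Assumption: $40B is the 2022 base and $1.3T the 2032 forecast (ten years).
start_value = 40.0      # generative AI market in 2022, in billions of USD
end_value = 1_300.0     # forecast for 2032, in billions of USD
years = 2032 - 2022     # ten compounding periods

multiple = end_value / start_value              # overall growth multiple
cagr = multiple ** (1 / years) - 1              # compound annual growth rate

print(f"{multiple:.1f}x over {years} years, CAGR of {cagr:.1%}")
```

The multiple works out to 32.5x and the CAGR to roughly 41.6%, consistent with the reported “32-fold” and “42%” after rounding.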

During NVIDIA’s recent earnings call, Jensen Huang said that demand for NVIDIA’s latest products would remain insatiable for the rest of the year, and that despite increasing supply, demand shows no signs of slowing. “Generative AI has kickstarted a new investment cycle,” Huang stated, predicting that data center infrastructure will double in scale within five years, representing an annual market opportunity worth hundreds of billions of dollars.
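A doubling within five years implies a specific annual growth rate. The sketch below derives it, assuming smooth compound growth, which is an illustrative simplification rather than something Huang stated.

```python
# Annual growth rate implied by "doubling within five years",
# assuming smooth compound growth (illustrative assumption).
growth_multiple = 2.0   # data center infrastructure scale doubles
years = 5               # over a five-year horizon

implied_rate = growth_multiple ** (1 / years) - 1
print(f"Doubling in {years} years implies about {implied_rate:.1%} growth per year")
```

Under this assumption, doubling in five years corresponds to roughly 14.9% growth per year.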

Gartner predicts that AI chip revenue will reach $67.1 billion in 2024, up 25.6% from 2023, and will more than double from its 2023 level to reach $119.4 billion by 2027.
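The Gartner figures can be reconciled with a little arithmetic: backing out the implied 2023 revenue from the 2024 figure and its growth rate shows which base year the “more than double” comparison refers to. This is a sketch using only the numbers quoted above; the variable names are illustrative.

```python
# Reconcile the Gartner figures: 2024 revenue, its growth rate, and the 2027 forecast.
revenue_2024 = 67.1      # billions of USD
growth_2024 = 0.256      # 25.6% year-over-year growth versus 2023
revenue_2027 = 119.4     # billions of USD

revenue_2023 = revenue_2024 / (1 + growth_2024)   # implied 2023 base
multiple_vs_2023 = revenue_2027 / revenue_2023    # growth relative to 2023
multiple_vs_2024 = revenue_2027 / revenue_2024    # growth relative to 2024
cagr_2024_2027 = multiple_vs_2024 ** (1 / 3) - 1  # three compounding years

print(f"Implied 2023 revenue: ${revenue_2023:.1f}B")
print(f"2027 vs 2023: {multiple_vs_2023:.2f}x; 2027 vs 2024: {multiple_vs_2024:.2f}x")
print(f"Implied 2024-2027 CAGR: {cagr_2024_2027:.1%}")
```

The implied 2023 base is about $53.4 billion, so $119.4 billion is roughly 2.2 times the 2023 level but only about 1.8 times the 2024 level; “more than double” holds relative to 2023.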

A report from Precedence Research forecasts that the AI chip market, including CPUs, GPUs, ASICs, and FPGAs, will grow from approximately $21.9 billion in 2023 to $227.4 billion by 2032, a compound annual growth rate of nearly 30% from 2023 to 2032.
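The “nearly 30%” growth rate can be verified from the two endpoint values. Note that 2023 to 2032 spans nine compounding periods, not ten; the variable names below are illustrative.

```python
# Verify the compound annual growth rate implied by the Precedence Research forecast.
value_2023 = 21.9    # AI chip market in 2023, in billions of USD
value_2032 = 227.4   # forecast for 2032, in billions of USD
years = 2032 - 2023  # nine compounding periods

cagr = (value_2032 / value_2023) ** (1 / years) - 1
print(f"Implied CAGR 2023-2032: {cagr:.1%}")
```

The result is roughly 29.7% per year, in line with the reported figure.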

Source: Zhitong Finance