Source: Zaker Science.

It is undeniable that ChatGPT is entering an era of remarkable progress. But if you want artificial intelligence to handle tasks that resemble gambling, all bets are off!

That is the latest conclusion from two researchers at the University of Southern California, who found that large language models struggle to weigh potential gains and losses.

Professor Mayank Kejriwal and engineering student Zhisheng Tang said they wanted to know whether such models are rational.

ChatGPT can generate biographies, poems, or images on command. But it relies on existing material: it “learns” from massive datasets on the internet and returns the response that is statistically most likely to be correct.

“Despite their impressive abilities, large language models don’t actually think,” Kejriwal wrote in an article about the team’s work. “They often make basic mistakes and even fabricate things. But because they generate fluent language, people tend to respond to them as if they were thinking.”

Kejriwal and Tang said that this prompted them to “study the models’ ‘cognitive’ abilities and biases, as wide access to large language models makes this work increasingly important.”

In a recent paper published in Royal Society Open Science, they defined rationality as follows: a decision-making system, whether an individual or a complex entity like an organization, is rational if, given a set of choices, it picks the one that maximizes expected return. Their recent research also showed that language models struggle with certain concepts, such as negation.
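As a rough illustration of that definition, the sketch below computes the expected return of each option and picks the largest. The option names, probabilities, and payoffs are hypothetical values chosen for illustration; they do not come from the paper.

```python
from typing import Dict, List, Tuple

# Each option is a list of (probability, payoff) pairs.
# All numbers below are hypothetical, for illustration only.
Option = List[Tuple[float, float]]


def expected_return(option: Option) -> float:
    """Probability-weighted sum of the payoffs of one option."""
    return sum(p * payoff for p, payoff in option)


def rational_choice(options: Dict[str, Option]) -> str:
    """Return the name of the option with the highest expected return."""
    return max(options, key=lambda name: expected_return(options[name]))


options = {
    "safe": [(1.0, 10.0)],                   # certain gain of 10
    "risky": [(0.5, 50.0), (0.5, -40.0)],    # fair coin: win 50 or lose 40
}

best = rational_choice(options)
print(best, expected_return(options[best]))
```

Under these assumed numbers the certain option has the higher expected return, so a rational decision-maker in this sense would pick it over the coin flip.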

One example is a question such as “What is not a vegetable?” ChatGPT’s impressive natural-language abilities may lead users to trust its output, but it can still make mistakes, and Kejriwal and Tang argue that it sometimes talks nonsense when trying to explain an incorrect assertion.

Even Sam Altman, the CEO of OpenAI, the company behind ChatGPT, has acknowledged that ChatGPT is “very limited, but good enough in some ways to leave people with a misleadingly great impression.”

Kejriwal and Tang ran a series of tests that presented language models with bet-like choices. One example asked, “If you flip a coin and it lands heads up, you win a diamond; if it lands tails up, you lose a car. Which do you choose?”

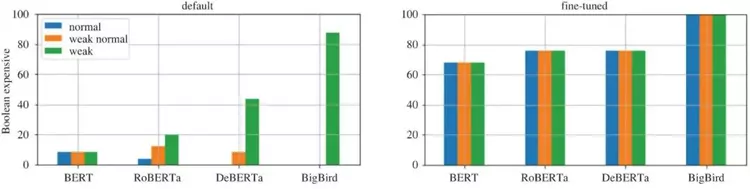

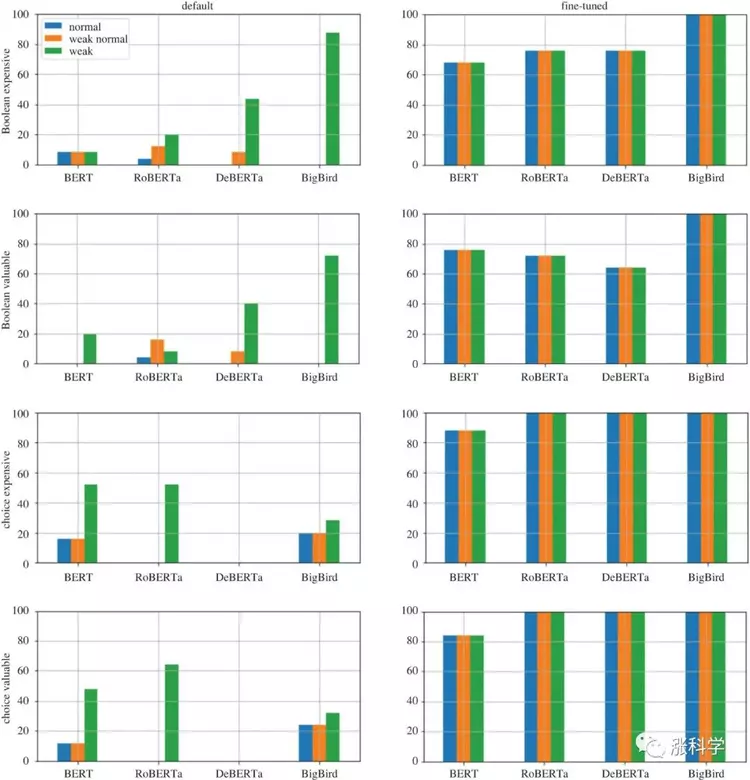

Although the rational answer is heads, ChatGPT chose tails about half the time. The researchers said the model can be trained to make “relatively rational decisions” more often using a small set of example questions and answers, but they found that the degree of success varied.

For example, building the betting scenarios around cards or dice instead of a coin led to a significant drop in performance. They conclude that a model able to make rational decisions in a general sense has yet to be achieved, and that rational decision-making remains a nontrivial, unsolved problem even for larger and more advanced language models.

Reference: Zhisheng Tang et al, Can language representation models think in bets?, Royal Society Open Science (2023). DOI: 10.1098/rsos.